Undergraduate psychology degrees in the UK require the teaching of research methods and statistics. This is usually the module that gets a lot of hate for being dry (or hard). Yet I believe it may be the most important part of an undergraduate psychology course, because without it we cannot actually understand research. In fairness to the lecturers at the universities I have attended, and I believe most others, it is made pretty clear that statistics are important: of course we need a working understanding of statistics to understand and conduct research. Unfortunately, I think that somewhere around week 2 or 3 of running yet another new analysis in SPSS that they do not fully understand, students lose some of their motivation. As a result, they miss out on the most important aspect of statistical analysis: interpreting the statistics. Thankfully, this is where 'Improving your statistical inferences' comes in.

There has definitely been improvement since I was an undergraduate. In the lab practicals I have demonstrated in (and marked many reports from), the students seem to grasp how to interpret statistics better than I did at that stage. Note: I was not number averse; my strongest subject was always maths, and there was plenty of statistics at A-level. These students even know to report effect sizes, which I barely understood until my Masters. This is great progress, though judging from some of the reports I'm not completely convinced that these effect sizes have been interpreted or fully understood, beyond knowing that they should be reported.

But it is not the place of this post to rip on undergraduate statistics modules. I want to talk about the course "Improving your statistical inferences" taught by Daniel Lakens, and in particular to highlight a few of its important lessons that I wish I had discovered as an undergraduate. Side note for undergraduates: if you don't understand something that you think is important, it is also your responsibility to find that information. Just because it is not in the lecture does not mean that it is not important, or even essential. On to two of the lessons.

Type 1 and Type 2 Errors

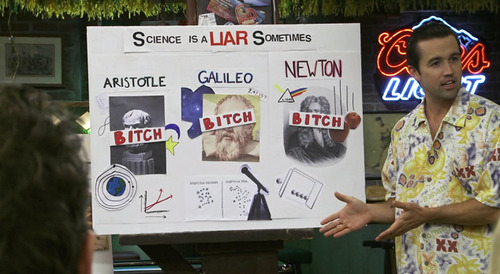

My wife didn't quite appreciate the reference. Any other It's Always Sunny fans out there?

We all learned about type 1 and type 2 errors. What I now realise is that I did not fully appreciate how important they are. To recap, a type 1 error is finding a significant result when there is no real effect; the probability of making one is alpha. A type 2 error is finding no significant result even though there is a real effect; the probability of making one is beta, which equals 1 - power. Or in the next picture...
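The course drives these definitions home by simulating data in R. As a rough sketch of the same idea in Python (my own illustration, not code from the course), we can simulate many two-group studies and count how often p < .05. To stay within the standard library I assume a two-sample z-test with a known standard deviation of 1 instead of the usual t-test; the 50 participants per group and the effect size d = 0.5 are arbitrary choices.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()  # standard normal, used for z-test p-values


def z_test_p(group_a, group_b, sigma=1.0):
    """Two-sided p-value for a difference in means, assuming the SD (sigma) is known."""
    se = sigma * (1 / len(group_a) + 1 / len(group_b)) ** 0.5
    z = (fmean(group_b) - fmean(group_a)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def significant_rate(true_d, n_per_group=50, alpha=0.05, n_sims=4000, seed=1):
    """Proportion of simulated studies with p < alpha when the true effect is d."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]     # control group
        b = [rng.gauss(true_d, 1.0) for _ in range(n_per_group)]  # group shifted by d SDs
        if z_test_p(a, b) < alpha:
            hits += 1
    return hits / n_sims


type1 = significant_rate(true_d=0.0)  # no real effect: every "hit" is a type 1 error
power = significant_rate(true_d=0.5)  # real effect: the hit rate estimates power
type2 = 1 - power                     # misses when the effect is real
print(f"Type 1 error rate ~ {type1:.3f}")
print(f"Power ~ {power:.3f}, type 2 error rate ~ {type2:.3f}")
```

With no real effect the proportion of significant results should land close to alpha (.05); with a real effect of d = 0.5 it estimates power, and one minus that is the type 2 error rate.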

Why is this important?

"We know this!" everybody is screaming. But this underlies a key point in the teaching of statistics.

In psychology we are trained that p < .05 is good, without a full appreciation of how large, or how important, the actual effect in question is. These lessons reinforce the notion that the effect size is the important factor, rather than the p-value itself. This is of the utmost importance for those beginning their research journey, and I wish I had appreciated it during my undergraduate degree. Across the course, we learn about several methods to reduce these errors. Amongst other things, a priori methods such as increasing your sample size help to control type 2 errors, and a posteriori methods such as correcting for multiple comparisons help to control type 1 errors.
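Both levers can be sketched with a few lines of arithmetic. The sketch below is again my own illustration in Python, and it uses a normal approximation rather than exact t-test power. It shows how the type 2 error rate for a hypothetical small effect of d = 0.3 shrinks as the sample size grows, and how the chance of at least one false positive across ten independent tests of null effects is inflated unless alpha is divided by the number of tests (a Bonferroni correction).

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal


def approx_power(d, n_per_group, alpha=0.05):
    """Normal-approximation power of a two-sided, two-sample test for effect size d."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    shift = d * (n_per_group / 2) ** 0.5  # expected z statistic when the effect is real
    return (1 - Z.cdf(z_crit - shift)) + Z.cdf(-z_crit - shift)


def familywise_error(m_tests, alpha=0.05):
    """Chance of at least one false positive across m independent tests of null effects."""
    return 1 - (1 - alpha) ** m_tests


for n in (20, 50, 100, 200):
    pw = approx_power(0.3, n)
    print(f"n = {n:3d} per group: power = {pw:.2f}, type 2 error = {1 - pw:.2f}")

m = 10
print(f"{m} uncorrected tests: FWER = {familywise_error(m):.3f}")
print(f"{m} Bonferroni-corrected tests: FWER = {familywise_error(m, 0.05 / m):.3f}")
```

Ten uncorrected tests give roughly a 40% chance of at least one false positive (1 - .95^10 is about .401), while the Bonferroni-corrected threshold of .005 brings it back just under .05. The normal approximation slightly overstates power for small samples, so a dedicated power analysis tool is the better choice when planning a real study.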

P-curve analysis

p-curve analysis is a meta-analytic technique that examines the distribution of statistically significant p-values in a literature. It allows the analyst to draw inferences about whether a collection of statistical results is likely to reflect p-hacking. Here is one question I could not answer until taking this course: what p-values would we expect if there is no actual effect in an analysis? Answer: any p-value, because when there is no effect the distribution of p-values is uniform. So, for example, a p-value near .789 is just as likely as one near .002. Now, what if there is a real effect? In this case the distribution shifts towards lower p-values (highly significant, or whichever terminology one prefers). If we plot this distribution, we would expect something like the right-hand graph in the figure below.
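To see the uniform-versus-right-skewed pattern, the sketch below simulates p-values with and without a true effect and tallies the significant ones into .01-wide bins, which is essentially what a p-curve plots. It rests on the same assumptions as my earlier sketches (a known-SD z-test, written in Python for illustration); 30 participants per group and d = 0.5 are arbitrary.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()


def simulated_p(rng, true_d, n_per_group):
    """One two-sided z-test p-value for two groups with a known SD of 1."""
    a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    b = [rng.gauss(true_d, 1.0) for _ in range(n_per_group)]
    z = (fmean(b) - fmean(a)) / (2 / n_per_group) ** 0.5
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def p_curve_bins(p_values):
    """Share of significant p-values in the bins .00-.01, .01-.02, ..., .04-.05."""
    sig = [p for p in p_values if p < 0.05]
    counts = [0] * 5
    for p in sig:
        counts[min(int(p / 0.01), 4)] += 1  # clamp guards against float rounding at .05
    return [c / len(sig) for c in counts] if sig else counts


rng = random.Random(2024)
null_ps = [simulated_p(rng, 0.0, 30) for _ in range(20000)]   # no real effect
effect_ps = [simulated_p(rng, 0.5, 30) for _ in range(2000)]  # real effect, d = 0.5

print("no effect:  ", [round(s, 2) for s in p_curve_bins(null_ps)])
print("real effect:", [round(s, 2) for s in p_curve_bins(effect_ps)])
```

With no effect each bin should hold roughly a fifth of the significant results (a flat line); with a real effect the .00-.01 bin should dominate (a right-skewed curve).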

We may instead see a different pattern, with more p-values at the high end of the significant range, say between .04 and .05, just under the "just-significant" line. This may suggest p-hacking or optional stopping (running more participants until a significant result is found), amongst other things; see the left-hand graph in the image below. So, in short, if a literature is filled mainly with p-values at the high end of significance, e.g. lots of .04 or higher, then we might want to examine that research further before being confident in the results.
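Optional stopping is easy to simulate. In this hypothetical sketch there is no real effect, and the "researcher" tests after every 10 participants per group (up to 50), stopping as soon as p < .05. The design (batch size, maximum sample, known-SD z-test) is my own assumption for illustration.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()


def optional_stopping_study(rng, start_n=10, step=10, max_n=50, alpha=0.05):
    """Simulate one null study that adds participants until p < alpha or max_n is reached.

    Returns the p-value at the point the 'researcher' stopped.
    """
    a, b = [], []
    n = start_n
    while True:
        while len(a) < n:
            a.append(rng.gauss(0.0, 1.0))  # no real effect: both groups share a mean
            b.append(rng.gauss(0.0, 1.0))
        z = (fmean(b) - fmean(a)) / (2 / n) ** 0.5
        p = 2 * (1 - STD_NORMAL.cdf(abs(z)))
        if p < alpha or n >= max_n:
            return p
        n += step  # not significant yet: recruit another batch and test again


rng = random.Random(7)
ps = [optional_stopping_study(rng) for _ in range(4000)]
sig = [p for p in ps if p < 0.05]
false_positive_rate = len(sig) / len(ps)
share_near_threshold = sum(p >= 0.04 for p in sig) / len(sig)
print(f"False-positive rate with optional stopping: {false_positive_rate:.3f}")
print(f"Share of significant p-values in [.04, .05): {share_near_threshold:.2f}")
```

Peeking five times pushes the false-positive rate well above the nominal 5% (theory for five equally spaced looks puts it at roughly 14%), and significant results from the later looks tend to land just under .05, which is the bunching a p-curve looks for.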

Taken from Simonsohn et al.

http://www.haas.berkeley.edu/groups/online_marketing/facultyCV/papers/nelson_p-curve.pdf

The p-curve analysis lecture supports the view that there is nothing inherently special about p < .05, especially for values just under the threshold. Indeed, a high proportion of such results within a research literature suggests that the effect may not be as robust as believed. Note, however, that p-curve analysis is a relatively new technique and cannot definitively diagnose p-hacking or other questionable research practices. There is a more in-depth discussion of p-curve analysis in the course.
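To make "more low than high p-values" concrete, here is a simplified check in the spirit of p-curve's right-skew test: if significant results carry no evidential value, each significant p-value should fall below .025 half the time, so a binomial test tells us whether low p-values are over-represented. This is only a sketch; the published p-curve method also uses continuous tests, and the example p-values below are made up.

```python
from math import comb


def binomial_right_skew_test(p_values, alpha=0.05):
    """Simplified p-curve check: are significant p-values skewed towards zero?

    If the significant results carry no evidential value, p-values below alpha
    are uniform, so each falls below alpha / 2 with probability 0.5.
    Returns (k, n, one-sided binomial p-value for seeing k or more low p-values).
    """
    sig = [p for p in p_values if p < alpha]
    n = len(sig)
    k = sum(p < alpha / 2 for p in sig)
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    return k, n, tail


# Hypothetical p-values, invented for illustration only.
right_skewed = [0.001, 0.003, 0.002, 0.010, 0.004, 0.012, 0.020, 0.031, 0.008, 0.001]
bunched = [0.041, 0.048, 0.044, 0.039, 0.046, 0.012, 0.049, 0.043, 0.047, 0.038]
print(binomial_right_skew_test(right_skewed))
print(binomial_right_skew_test(bunched))
```

The first (made-up) set, with nine of ten p-values below .025, gives a binomial p of about .011; the second, bunched just under .05, gives about .999, meaning no sign of right skew. As the lecture stresses, a result like the second is a reason to look harder, not proof of p-hacking.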

About the course as a whole

The format of the course is engaging. The lectures are short and include quick multiple-choice checks of understanding. This was a nice touch for me in particular, as it led me to revisit a few ideas straight away rather than waiting for the exams to realise my error. The quizzes and exams are exactly what they need to be to ensure that those taking the course have a good overall understanding of the lessons. The practical component is a stand-out feature, as it gives students the opportunity to engage with the analyses and simulate lots of data to really drive the lesson home. It may also be a nice way to introduce people to R, without any pressure to learn the language. The final assignment requires students to pre-register a small experiment and report it. This was very useful to me, especially as the course will hopefully instil in everybody the benefits of pre-registration and open science. Each week can be completed with a commitment of 2-3 hours, depending on the topic, so the time commitment is not huge.

I hope that, at the least, this post is appreciated as a positive review and a long-winded thank you to Prof. Lakens for the lessons in the course. More importantly, if it convinces one person in psychology to take the course and learn to better understand and draw inferences from our own and others' statistical analyses, then the time spent writing this post has been well spent. Anybody interested, click here for the course homepage.

Any and all comments on this first post since my return to blogging are appreciated. Correct me if I have misquoted anything or misrepresented the information I have discussed.
