Amnesty International in London hosted the third annual science meeting run by the mental health charity MQ. It brought together many mental health researchers and professionals to discuss state-of-the-art and groundbreaking research across many interdisciplinary domains. MQ's tag line is "Transforming mental health through research", and what I loved about this event in particular was the focus on the research that can be, and has been, undertaken to tackle the growing challenge of mental health. MQ highlights the need for interdisciplinary and collaborative approaches, and science meetings such as this one make the necessary discussions possible. That emphasis on "through research" is what drew me to MQ in particular.

Two days of talks, an extended poster session, networking opportunities, and an insightful panel discussion on "What good is a diagnosis?" made up the line-up. I had the opportunity to get feedback on my poster "A cognitive model of psychological resilience: current research and future directions", which has thankfully given me quite a lot to ponder, as well as some ideas for cognitive task designs. In this post I would like to highlight several take-home messages that I especially took to heart during the meeting.

Targeting the mechanisms

Michelle Moulds gave a talk and led the discussion on repetitive negative thinking as a transdiagnostic process in mental illness. I was particularly glad, as this aligned well with my current thinking (and with the background to an upcoming grant application). Repetitive negative thinking is common across disorders and is predictive of comorbidity. Therefore, the idea that interventions should target repetitive negative thinking is timely and useful. In relation to my field (discussed more below), this suggests that cognitive interventions should target this underlying process, rather than attempting to address symptoms that lie further downstream. The discussion also led to the mention of work suggesting that certain patterns of thought are promotive and others destructive, perhaps depending on their content (e.g. positive versus negative content). Again, this is a useful distinction that resonates with my work; cognitive processes can be adaptive or maladaptive, depending on the context and content.

We need longitudinal research

I have made this point before, so I don't want to spend too much time on it here. Suffice it to say that more longitudinal research is needed, in particular with a developmental focus, in order to understand mental illness. Although the cross-sectional and correlational research that has been undertaken is important, it needs to be situated in a developmental context. There are ethical issues with many potential experimental designs (e.g. maltreating one group of children in order to compare them with a protected group); however, this is where the interdisciplinary nature of MQ comes into play. Animal models, genetic, and epigenetic approaches are highly valuable, as are natural experiments such as the English and Romanian Adoptee study discussed by Professor Sonuga-Barke. My take-home message from the varied approaches discussed during the meeting is that there are many ways in which vastly different disciplines can be integrated to provide a complementary understanding of mental health. A caveat is that more longitudinal research is still needed to understand the development of mental ill-health and to develop preventative and resilience-based approaches.

We need collaborative and integrative research

As touched on above, we need to collaborate and conduct multifaceted research in an interdisciplinary fashion. Animal models and basic science help us understand the mechanisms underpinning mental health, therapeutic intervention studies allow us to assess treatment options, and (epi)genetic studies give an additional glimpse into the underpinnings of mental health.

As was brilliantly put during the meeting (paraphrased here), it starts with meetings such as this one, which facilitate discussion between disciplines so that we can share our knowledge and expertise. Ultimately, we are all interested in the same thing: transforming mental health through research. From this community will rise an exceptional body of research. Again, I love the "through research" part of the tag line and think that it is what we need more of (along with the funding and support to do it).

More research from a cognitive-experimental information-processing approach is needed

Or perhaps it would be better to say that this approach should be better represented and communicated. I was excited to meet several researchers who are doing some great work in this field, including Ernst Koster and Colette Hirsch. My supervisor, Elaine Fox, is also well known in this field. The cognitive-experimental approach borders on cognitive neuroscience and may provide a link between biological and neurological measures and mental health-related behaviour. We typically use computerised tasks to investigate differential responses to emotionally salient stimuli using a range of outcomes, including behavioural (response times and accuracy rates), psychophysiological (e.g. eye tracking, heart rate), and neural (EEG, fMRI and so on) measures, amongst others. These approaches have provided strong evidence for the causal contribution of automatic attentional biases favouring threatening stimuli to anxiety symptoms (). In addition, this approach offers a number of paradigms designed to train particular biases and executive control processes. In one discussion during the MQ meeting it was commented that working memory training might help individuals with ADHD-related working memory impairments, but that unfortunately no such paradigms are available. In fact, there are several lines of research tackling just that, with some success. For example, improvements in working memory capacity have been shown to reduce trait anxiety (Sari, Koster, Pourtois, & Derakshan, 2016). There are a growing number of studies from Derakshan and Koster that highlight the benefits of executive control training for mental health symptomatology. Building on this existing research with novel paradigms and integrated methodologies (e.g. incorporating EEG and eye-tracking measures) has the potential to extend our understanding of the cognitive basis of mental health and of resilience to mental illness.
In addition, cognitive interventions may prove to be a useful clinical aid or preventative tool for transforming mental health.
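To make the response-time logic above a little more concrete, here is a minimal Python sketch of one common way an attentional bias score is derived, assuming a dot-probe-style task (the post does not name a specific paradigm). The trial data, the "congruent"/"incongruent" labels and the attention_bias_index function are hypothetical illustrations, not taken from any study mentioned here.

```python
from statistics import mean

# Hypothetical trial records: (trial_type, response_time_ms, correct)
# "congruent":   the probe replaced the threatening stimulus
# "incongruent": the probe replaced the neutral stimulus
trials = [
    ("congruent", 512, True),
    ("incongruent", 548, True),
    ("congruent", 498, True),
    ("incongruent", 561, True),
    ("congruent", 530, False),   # error trial, excluded below
    ("incongruent", 539, True),
]

def attention_bias_index(trials):
    """Mean incongruent RT minus mean congruent RT, correct trials only.

    Positive values suggest faster responses at the threat location
    (vigilance towards threat); negative values suggest avoidance.
    Real analyses usually also trim outlying response times.
    """
    congruent = [rt for kind, rt, ok in trials if ok and kind == "congruent"]
    incongruent = [rt for kind, rt, ok in trials if ok and kind == "incongruent"]
    return mean(incongruent) - mean(congruent)

print(attention_bias_index(trials))  # roughly 44.3 ms with this invented data
```

A single score like this is only a starting point; its reliability and how it relates to symptoms are separate empirical questions.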

Final thoughts

The MQ science meeting was successful in bringing together experts from around the globe and from a multitude of disciplines, with the intention of fostering collaborative and interdisciplinary mental health research. It was amazing to see such a range of science brought to the table to tackle the growing issue of mental health, at both a societal and an individual level. I am looking forward to the next meeting; in the meantime, I look forward to the funding call announcements and to submitting my own proposals to fill the gap in current research investigating the cognitive-affective aspects of repetitive negative thinking and mental health in adolescence, and the development of resilience in this particularly vulnerable period.

I talk about research from the perspective of a DPhil student / ECR. Look forward to posts about research on cognitive biases, emotion, and resilience, as well as rants about whatever is on my mind about research life.

Tuesday, 7 February 2017

Monday, 6 February 2017

A rebuttal: What does it mean to be a successful academic? And how to not suck at achieving it

I came across this blog post on Twitter a few days ago titled "What Does It Mean to Be a Successful Academic? And How Not to Suck at Achieving It". It sounds great, and as a junior academic crawling to the end of my PhD and looking (and hoping) for that elusive postdoc position, it felt like a timely read. The post has been shared a fair bit and does have some positive messages that I agree with. I confess, however, that the more I read, the more the post failed to resonate with me, so here goes with trying to reconcile that. Note: I am trying to be balanced, but these issues are hitting home.

The first message is to enjoy the work, which I wholeheartedly agree with. The example of slaving away 80-90 hours a week to get that perfect paper or self-nominated award sounds extremely unappealing. I also like the mindset that what defines success is a personal thing, and that driving to be "the best" in a field isn't necessarily healthy (nor conducive to good science, which is the marker of success I would like to strive towards).

The question "Is it worth it?" is raised, and it is an important side of the coin to balance. While not mentioned explicitly, the theme of life first and academic achievement second agrees with my mindset. One postdoc in my research lab is honest about this and conveys the refreshing attitude that, while she loves her job and research, if and when it becomes necessary to leave academia she will do so and be happy about it. Life first, academia second. I think that this is the way it should be.

Where the message of the post was derailed for me is its mention of one of the benefits of tenure: not having to worry about having the most publications or the highest impact factor. This is perhaps where the idea of personal achievement comes in. My idea of achievement at this stage is to do enough to stay in academia without an employment gap once my studentship funding runs dry. This introduces the thing the post fails to capture: competition. At the early career stage, you are fighting for these positions tooth and nail. That means that if you can get an extra publication, publish in higher-impact journals (which I realise is nearly entirely BS, but is still sadly important), acquire research funding, and so on, then you pretty much have to play that game just to get the position. As a junior academic you are also subject to departmental and university procedures that can leave you at a disadvantage (or at least without full credit for grant applications because you are too junior, as I recently found to my dismay). When competition and a lack of positions drive the most basic aspect of being an academic, having an academic position, the whole notion of the post falls apart. We junior academics cannot simply stop worrying and be content with a reasonable amount of output that is not enough to stand out from the crowd. This left me with a take-home message (completely unintended by the author, I am sure): that having tenure means you can stop worrying and enjoy life. But what about everybody else?

Recently we had a discussion with our director of graduate studies, who gave the average PhD completion time in our department as 44 months. For those like me who are lucky enough to have funding, it runs out after 3 years (36 months), leaving the average candidate around 8 months unfunded. So in terms of being successful and not sucking at achieving it, the best-case scenario is that PhD candidates are finding extra funding to finish their PhDs. Realistically, they are likely being strategic and submitting their theses later in order to get out those few extra papers and to gain extra time a few years later when applying for fellowships. In the worst-case scenario, it means that too many PhD candidates are paying their own way through the latter stages in order to be competitive in this under-funded environment.

I'll try to bring this full circle, and my apologies for the rant. Again, given my present worries about employment, the post hit home. It stresses personal context, desired quality of life, and one's definition of success as key factors to balance in this equation. I agree that these are factors to balance in order to have a fulfilling life. However, when your definition of success is simply being able to stay in academia (or to get into academia in the first place), the milestone moves far away from the one set in that particular post. It all comes down to personal circumstances, goals, and life balance. In my case, without the benefit of tenure, the achievements that may be seen as arbitrary may also be the very ones that enable me to do what I love and stay in research.

Tuesday, 31 January 2017

A cognitive model of psychological resilience - current thinking and future directions

When our recent paper was made open access in the Journal of Experimental Psychopathology, it got me thinking about what comes next for the line of research we discussed in it. "A cognitive model of psychological resilience" (available from the journal or on my ResearchGate page) was my attempt to wrestle with two research domains that have potential for an integrated approach to psychological resilience and wellbeing. Separately, the cognitive-experimental approach to emotion dysfunction investigates the biased cognitive processes influencing mental ill-health, whereas positive-psychology approaches (such as resilience approaches and positive mental health continua) inform our understanding of what it is to be healthy. While there is some crossover between these fields, it was not sufficient for me to really investigate the cognitive mechanisms of resilience, well-being and positive mental health.

As this is the general topic of my DPhil, it left a bit of a gap to fill. My initial attempt to do so involved several measures of cognitive bias alongside self-reports of positive mental health and resilience. This is exactly the approach that we advise against in our paper, although we do acknowledge several benefits. The main rationale is that resilience is something that is demonstrated, rather than reported. The majority of the prior research I came across that attempted to investigate the cognitive basis of psychological resilience took a similar approach, which left me increasingly dissatisfied with it.

On the flip side, the gap between these fields became more apparent to me as I read the resilience and positive mental health literature. There was a consistent lack of cognitive methods. Or, when cognitive aspects were mentioned, they typically referred to self-reported measures or the more conscious and complex biases known from social psychology, rather than the automatic selective biases that I was interested in.

I presented this paper as posters and oral presentations to both audiences and received very different responses from each. This first-hand experience gave me more of an appreciation of the rift between the two fields, including their different understandings of the terminology used. I now begin these presentations with a quick, audience-specific summary, to ensure that whichever group I talk to really "gets" the purpose of our cognitive model of resilience.

Back to the present: I will be presenting a poster of our model on Thursday at the MQ Science Meeting. This seemed a perfect opportunity to do a bit more thinking about ongoing research that supports the model and about future research directions. The rest of this post is intended to be a more in-depth version of the poster that I will present, partly to flesh out the material within the poster, but also to get more of this thought process down in a far more usable form than a few notes scribbled while creating the poster. Here goes...

A cognitive model of psychological resilience

In our paper, we noted that certain biases thought to be detrimental (e.g. attentional bias towards threat) have adaptive roles in certain circumstances. Therefore, the ability to flexibly switch between strategies must be important for promoting adaptive responses across multiple circumstances and life events. However, flexibility alone is not enough; some degree of rigidity or inflexibility is also called for, for example when the current strategy is effective or will be more effective in the long term. We therefore hypothesised an overarching system that guides the flexible and directed application of cognitive processing strategies depending on present circumstances, future-oriented goals, and affective states, and that integrates prior knowledge and experience in order to adaptively align information-processing strategies. We termed this the mapping system, and in the paper we went on to detail how it might guide future research in developing more detailed cognitive accounts of psychological resilience.

Recent and ongoing research

In a novel task assessing the alignment of attentional bias (developed by Dr Lies Notebaert), participants successfully aligned their processing biases to the blocked circumstances: for example, they more accurately attended to controllable threats and ignored uncontrollable threats. This suggests that healthy individuals do successfully align, or map, cognitive biases to respond dynamically to differing circumstances, in this case the controllability of potentially threat-related cues. Current follow-up research is investigating whether this alignment is impaired in anxious individuals.

Ongoing research in the OCEAN lab has investigated psychological flexibility in relation to resilience. Task-switching measures conceptually capture psychological flexibility as an increased capacity to switch between task sets. With a more specific focus on emotional health, these paradigms have been adapted to include emotional components. In one such task, participants respond to emotional and neutral faces based on whether a face is the odd one out according to one of several rules. Task switching can therefore be examined both when emotion is the focus of the switch and when emotion is present but irrelevant to the current task. In another task, working memory capacity is incorporated with emotional and non-emotional internal set switches, in order to examine task switching when it is performed internally rather than prompted by trial cues. At this stage the analyses are preliminary, but they potentially indicate that trait resilience is influenced by task-switching capability in the presence of emotion.
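One common way to quantify task-switching capability is the switch cost: the extra time taken on trials where the rule changes, compared with trials where it repeats. The Python sketch below is a hypothetical illustration, assuming trials have already been coded as switch or repeat; the condition labels and response times are invented and do not come from the OCEAN lab tasks described above.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical trials: (condition, transition, rt_ms)
# condition:  whether emotion is the task-relevant dimension or an irrelevant feature
# transition: "switch" if the sorting rule changed from the previous trial, else "repeat"
trials = [
    ("emotion_relevant", "repeat", 620),
    ("emotion_relevant", "switch", 710),
    ("emotion_relevant", "repeat", 600),
    ("emotion_relevant", "switch", 690),
    ("emotion_irrelevant", "repeat", 640),
    ("emotion_irrelevant", "switch", 700),
    ("emotion_irrelevant", "repeat", 660),
    ("emotion_irrelevant", "switch", 720),
]

def switch_costs(trials):
    """Return {condition: mean switch RT - mean repeat RT}.

    Assumes every condition contains both switch and repeat trials;
    otherwise statistics.mean raises StatisticsError on an empty list.
    """
    rts = defaultdict(lambda: defaultdict(list))
    for condition, transition, rt in trials:
        rts[condition][transition].append(rt)
    return {
        condition: mean(by_transition["switch"]) - mean(by_transition["repeat"])
        for condition, by_transition in rts.items()
    }

print(switch_costs(trials))  # {'emotion_relevant': 90, 'emotion_irrelevant': 60}
```

Comparing the two costs is one way to ask whether switching is harder when emotion is the relevant dimension; a real analysis would also handle error trials, outliers and individual differences before relating switch costs to trait resilience.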

Future research directions

Much of the following thinking comes from an upcoming grant application that has taken up most of my thinking time for the past few months. Naturally, these are areas of research that I believe should be addressed to further our understanding of the cognitive basis of promoting psychological resilience to emotion dysfunction and the development of mental ill-health.

Developmental approaches: It is almost too common an observation that we need longitudinal and developmental approaches to mental health research. This is especially important for a cognitive approach, as such work is largely missing from the literature, and even more so with adolescent populations. This is unfortunate given that adolescence is a period of emotional vulnerability and that many cases of adult mental ill-health develop from adolescent emotional disorders. Thus, more longitudinal research is needed. Of particular interest in this field would be recruiting large cohorts of adolescents and following them up regularly to investigate how developmental changes in cognitive processing predict future emotional pathology and resilience to the development of such pathology.

Repetitive negative thinking induction procedures: Worry and rumination take up valuable cognitive resources and are a contributing characteristic of many emotional disorders. Examining this maladaptive thinking style goes beyond specific diagnoses (which show high rates of comorbidity anyway). Additionally, these states can be experimentally induced, which gives us the ability to directly examine the extent to which these processing styles impact processing capability, and any knock-on effects on emotional information processing.

Cognitive-affective flexibility training: To examine the causal influence of a particular process on an outcome, you must modify the process in question. In the cognitive-experimental approach to emotion dysfunction, this typically takes the form of cognitive bias modification. If the modification procedure successfully changes the process in question, we can reasonably address whether that process causally influences the outcome of interest, for example the tendency or susceptibility to engage in repetitive negative thinking. Research aimed at improving executive functioning has shown promising results; however, whether flexibility can be trained in the context of emotion remains to be seen. Longitudinal designs can be used to examine whether short-term transient changes, or changes resulting from longer training programmes, go on to influence vulnerability factors and potentially boost resilience to the development of emotional disorders.
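As a rough illustration of how a cognitive bias modification procedure manipulates a process, the sketch below generates a hypothetical dot-probe training sequence in which the probe usually replaces the neutral stimulus, nudging attention away from threat. This is an assumed attention bias modification design, shown only to illustrate the idea of a training contingency; it is not a flexibility training paradigm, and the function name and parameters are hypothetical.

```python
import random

def make_abm_trials(n_trials, p_probe_at_neutral, seed=None):
    """Generate a hypothetical dot-probe training sequence.

    Each trial pairs a threatening and a neutral stimulus on opposite sides;
    the probe appears at the neutral stimulus's location with probability
    p_probe_at_neutral. A value of 1.0 approximates an "attend away from
    threat" training contingency; 0.5 gives a no-contingency control.
    """
    rng = random.Random(seed)  # seeded generator for a reproducible sequence
    trials = []
    for _ in range(n_trials):
        threat_side = rng.choice(["left", "right"])
        neutral_side = "right" if threat_side == "left" else "left"
        probe_at_neutral = rng.random() < p_probe_at_neutral
        trials.append({
            "threat_side": threat_side,
            "probe_side": neutral_side if probe_at_neutral else threat_side,
        })
    return trials

# Hypothetical training and control sequences of equal length
training = make_abm_trials(160, 1.0, seed=1)
control = make_abm_trials(160, 0.5, seed=1)
```

The comparison with a no-contingency control is what allows a change in the outcome to be attributed to the trained process rather than to task exposure alone.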

Some conclusions

A cognitive approach to psychological resilience offers many benefits, and I hope that our model will serve as a catalyst for research in this area. I've briefly covered several ongoing and planned research projects with the aim of highlighting how our model may be used to integrate two important fields of mental health research. In the future I will post an update on these projects and what they have taught us. Fingers crossed that we will also get funding to pursue some of the research that is vitally needed to investigate cognitive approaches to improving adolescent psychological resilience. In the meantime, please do have a read of our paper. I am looking forward to presenting the poster version of this post at the MQ Science Meeting, and I'll also make it available on my ResearchGate page.

Tuesday, 3 January 2017

Improving your statistical inferences from Daniel Lakens - a review

The key message I remember from undergraduate statistics modules is that you want your p value to be less than .05. In December I joined Daniel Lakens on his "Improving your statistical inferences" online course (free, unless you would like to buy the certificate). It became glaringly obvious that my ability to actually interpret statistics was extremely limited during, and even after, my undergraduate degree. In this review, I want to highlight a few of the lessons I gained from this course, and why everybody should complete it (especially current undergraduate and postgraduate students).

Undergraduate Psychology degrees in the UK require the teaching of research methods and statistics. This is usually the module that gets a lot of hate for being dry (or hard), yet if you want to actually understand research, I believe it may be the most important part of an undergraduate psychology course. In all fairness to the lecturers at the universities I have attended, and I believe most others, it is made pretty clear that statistics are important: of course we need a working understanding of statistics to understand and conduct research. Unfortunately, I believe that somewhere around week 2 or 3 of running yet another new analysis in SPSS that is not fully understood, some of the motivation gets lost. As a result, students miss out on a full appreciation of the most important aspect of statistical analysis: interpreting the statistics. Thankfully, this is where 'Improving your statistical inferences' comes in.

There has definitely been improvement since my undergraduate days. In the lab practicals that I have demonstrated in (and marked many reports from), the students seem to grasp how to interpret statistics better than I did at that stage. Note: I was not number averse; my strongest subject was always maths, and there was plenty of statistics at A-level. These students even know to report effect sizes, which I barely understood until my Masters. This is great progress, though judging from some of the reports I'm not completely convinced that these effect sizes have been interpreted or fully understood, beyond knowing that they should be reported.

But, it is not the place of this post to rip on undergraduate statistics modules. I want to talk about the course "Improving your statistical inferences" taught by Daniel Lakens. In particular I want to highlight a few of the important lessons from this course that I wish I had discovered during my undergraduate. Side note: undergraduates, if you don't understand something that you think is important then it is also your responsibility to find that information - just because it is not in the lecture does not mean that it is not important, or even essential. On to two of the lessons.

Type 1 and Type 2 Errors

We all learned about type 1 and type 2 errors. What I now realise is that I did not quite appreciate how important they are. To recap, type 1 error (alpha) is the probability of finding a significant result when there is no real effect. Type 2 error (1 - power) is the probability that we find no significant result, even when there is a real effect. Or in the next picture...
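To make these definitions concrete, here is a minimal simulation sketch in Python. It is my own illustration, not code from the course, which uses R. It assumes a two-sample z-test with a known standard deviation of 1 (a simplification of the usual t-test), and the settings of 20 participants per group and a true effect of d = 0.5 are hypothetical. The proportion of significant results when the true difference is zero estimates the type 1 error rate. When there is a real effect, the same proportion estimates power, and one minus that is the type 2 error rate.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()  # standard normal: mean 0, SD 1


def two_sample_z_p(x, y, sd=1.0):
    """Two-sided p-value for a difference in means, assuming a known SD (z-test)."""
    se = sd * (1 / len(x) + 1 / len(y)) ** 0.5
    z = (fmean(x) - fmean(y)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def rejection_rate(true_diff, n_per_group, alpha=0.05, n_sims=5000, seed=1):
    """Proportion of simulated studies that reach p < alpha."""
    rng = random.Random(seed)
    significant = 0
    for _ in range(n_sims):
        x = [rng.gauss(true_diff, 1.0) for _ in range(n_per_group)]
        y = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        if two_sample_z_p(x, y) < alpha:
            significant += 1
    return significant / n_sims


# Hypothetical settings: 20 participants per group.
type1 = rejection_rate(true_diff=0.0, n_per_group=20)  # no real effect
power = rejection_rate(true_diff=0.5, n_per_group=20)  # real effect of d = 0.5
type2 = 1 - power

print(f"type 1 error rate: {type1:.3f}")
print(f"power: {power:.3f}, type 2 error rate: {type2:.3f}")
```

With these assumed settings, the type 1 error rate should land close to .05. Power is only roughly 35%, however, so a real effect of this size would be missed about two times in three.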

Why is this important?

"We know this!" I hear everybody screaming. But this underlies a key point in the teaching of statistics.

In psychology we are trained that p < .05 is good, without fully appreciating the importance of the actual effect in question. These lessons reinforce the notion that it is the effect size that matters, rather than the p-value itself. This is of the utmost importance for those beginning their research journey, and I wish that I had appreciated it during my undergraduate degree. Across the course, we learn about several methods to reduce these errors. Amongst other things, a priori methods such as increasing your sample size help to control type 2 errors, and a posteriori methods such as correcting for multiple comparisons help to control type 1 errors.
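Both kinds of control can be sketched in a few lines of Python. This is an illustrative sketch, not material from the course, and the function names are my own. The sample-size function uses the standard normal approximation for a two-sided, two-sample comparison, n per group = 2((z_{1-α/2} + z_{1-β}) / d)². This comes out slightly lower than what t-test based power software reports. The familywise calculation assumes independent tests with every null hypothesis true.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def n_per_group(d, alpha=0.05, power=0.80):
    """A priori sample size per group (normal approximation, two-sided test)."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)  # critical value, about 1.96
    z_power = STD_NORMAL.inv_cdf(power)          # about 0.84 for 80% power
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


def familywise_error(alpha, m):
    """P(at least one type 1 error) across m independent tests when all nulls are true."""
    return 1 - (1 - alpha) ** m


def bonferroni(alpha, m):
    """Per-test alpha that keeps the familywise error rate at or below alpha."""
    return alpha / m


print(n_per_group(0.5))                                      # 63
print(n_per_group(0.2))                                      # 393
print(round(familywise_error(0.05, 10), 3))                  # 0.401
print(round(familywise_error(bonferroni(0.05, 10), 10), 3))  # 0.049
```

Under these assumptions, detecting a medium effect (d = 0.5) with 80% power needs about 63 participants per group, and a small effect (d = 0.2) needs nearly 400. Running ten uncorrected tests pushes the chance of at least one false positive to about 40%, and the Bonferroni correction brings it back under 5%.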

P-curve analysis

p-curve analysis is a meta-analytic technique that examines the distribution of statistically significant p-values in a literature. It allows the analyst to draw inferences about whether a collection of statistical results may reflect p-hacking. Here is one question I did not know the answer to until taking this course: what p-value would we expect if there is no actual effect in an analysis? Answer: any p-value, because under the null hypothesis the distribution of p-values is uniform. So, for example, p = .789 is just as likely as p = .002 (strictly, any two intervals of the same width are equally likely). Now, what if there is a real effect? In this case the distribution shifts towards lower p-values (highly significant, or whichever terminology one prefers). If we plot the significant p-values as a distribution, we would expect something like the right-hand graph of the p-curve figure in Simonsohn et al., with many more p-values near .01 than near .05.
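The shape of a p-curve can be reproduced by simulation. The sketch below is my own illustration, not the p-curve authors' software or the course's R code. It simulates many hypothetical two-group studies (a z-test with known SD, 20 per group), keeps only the significant p-values, and reports the share falling in each .01-wide bin from 0 to .05. With no true effect, the shares should be roughly equal (about 20% per bin). With a real effect, the first bin should dominate.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()


def simulate_p(true_diff, n, rng):
    """Two-sided p-value from one simulated two-group study (z-test, known SD = 1)."""
    x = [rng.gauss(true_diff, 1.0) for _ in range(n)]
    y = [rng.gauss(0.0, 1.0) for _ in range(n)]
    z = (fmean(x) - fmean(y)) / (2 / n) ** 0.5
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def p_curve(p_values, alpha=0.05, n_bins=5):
    """Share of significant p-values in each equal-width bin between 0 and alpha."""
    significant = [p for p in p_values if p < alpha]
    width = alpha / n_bins
    counts = [0] * n_bins
    for p in significant:
        counts[min(int(p / width), n_bins - 1)] += 1
    return [c / len(significant) for c in counts]


rng = random.Random(42)
null_curve = p_curve([simulate_p(0.0, 20, rng) for _ in range(20000)])
effect_curve = p_curve([simulate_p(0.5, 20, rng) for _ in range(5000)])

print("no effect:  ", [round(s, 2) for s in null_curve])    # roughly flat
print("real effect:", [round(s, 2) for s in effect_curve])  # right-skewed
```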

We may instead see a different pattern, with more p-values at the high end of the significant range, say between .04 and .05 (the "just significant" region). This may suggest p-hacking or optional stopping (running more participants until a significant result is found), amongst other things. See the left-hand graph of the p-curve figure taken from Simonsohn et al. (http://www.haas.berkeley.edu/groups/online_marketing/facultyCV/papers/nelson_p-curve.pdf). So, in short, if a literature is filled mainly with p-values at the high end of significance, e.g. lots of .04 or higher, then we might want to examine this research further before being confident in the results.
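Optional stopping, one of the practices mentioned above, is easy to simulate. This is a hypothetical sketch using the same simplified z-test with a known SD, and no simulated study contains a true effect. A researcher tests after every 10 participants per group, up to 100, and stops as soon as p < .05. Comparing this with a single test at the final sample size shows how peeking inflates the type 1 error rate above the nominal 5%. The numbers of looks and participants are my own assumptions.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()


def z_test_p(x, y):
    """Two-sided p-value for equal-sized groups, assuming a known SD of 1."""
    n = len(x)
    z = (fmean(x) - fmean(y)) / (2 / n) ** 0.5
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def false_positive_rate(optional_stopping, n_sims=2000, start=10, step=10,
                        max_n=100, alpha=0.05, seed=3):
    """Proportion of null studies declared significant."""
    rng = random.Random(seed)  # same seed, so both strategies see identical data
    hits = 0
    for _ in range(n_sims):
        # The null is true: both groups come from the same distribution.
        x = [rng.gauss(0.0, 1.0) for _ in range(max_n)]
        y = [rng.gauss(0.0, 1.0) for _ in range(max_n)]
        looks = range(start, max_n + 1, step) if optional_stopping else [max_n]
        # any() stops at the first significant look, mimicking "stop and publish".
        if any(z_test_p(x[:n], y[:n]) < alpha for n in looks):
            hits += 1
    return hits / n_sims


fixed = false_positive_rate(optional_stopping=False)
peeking = false_positive_rate(optional_stopping=True)
print(f"single test at n = 100: {fixed:.3f}")
print(f"test every 10 per group: {peeking:.3f}")
```

With these assumed settings, the single final test should stay near 5%. Testing at ten looks should give a false positive rate several times higher, somewhere close to 20%.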

Why is this awesome?

The p-curve analysis lecture supports the view that there is nothing inherently special about p < .05, especially at the upper end of that range. Indeed, a high proportion of such results within a research literature suggests that the effect may not be as robust as believed. Note, however, that p-curve analysis is a relatively new technique and cannot actually diagnose p-hacking and similar practices. There is a more in-depth discussion of p-curve analysis in the course.

About the course as a whole

The format of the course is engaging. The lectures are short and include quick multiple-choice checks of understanding. This was a nice touch for me in particular, as it led me to revisit a few ideas straight away rather than waiting for the exams to realise my error. The quizzes and exams are exactly what they need to be to ensure that those taking the course have a good overall understanding of the lessons. The practical component stands out, as it gives students the opportunity to engage with the analyses and simulate lots of data to really drive the lessons home. It may also be a nice way to introduce people to R, without any pressure to learn the language. The final assignment requires students to pre-register a small experiment and report it. This was very useful to me, especially as the course will hopefully instil in everybody the benefits of pre-registration and open science. Each week can be completed with a commitment of 2-3 hours, depending on the topic, so the time commitment is not huge.

There are many other useful and interesting insights offered by this course, too many to discuss here. It successfully covers controlling your alpha, increasing power, effect sizes, confidence intervals, and the differences between frequentist, likelihood, and Bayesian statistical approaches. We learn about the importance of open science, pre-registration, equivalence testing and much more. Most importantly, the course develops your ability to make inferences from your statistics, and it has left me wanting to implement these lessons in my research. This course will help me do better science.

I hope that, at the least, this post is appreciated as a positive review and a long-winded thank you to Prof. Lakens for the lessons in the course. More importantly, if it convinces one person in psychology to take the course and learn to better understand and make inferences from our own and others' statistical analyses, then the time spent writing this post has been well spent. Anybody interested, click here for the course homepage.

Any and all comments are appreciated on this first post as a return to blogging. Correct me if I have misquoted anything or misrepresented the information I have discussed.

Undergraduate Psychology degrees in the UK require the teaching of research methods and statistics. Usually, this is the module or course that gets a lot of hate for being dry (or hard). In order to actually understand research, I believe it may be the most important aspect of undergraduate psychology courses. In all fairness to the lecturers at the universities that I have attended, and I believe most others, it is made pretty clear that statistics are important. Of course we need a working understanding of statistics to understand and conduct research. Unfortunately, I believe that by somewhere around week 2 or 3 of running some new analysis that is not fully understood in SPSS, some of the motivation gets lost. This results in students lacking a full appreciation of the more important aspects of statistical analyses - interpreting statistics. Thankfully, this is where 'Improving your statistical inferences' comes in.

There is definitely improvement since my undergraduate. In the lab practicals that I have demonstrated in (and marked many reports from), the students seem to grasp the concept of interpreting statistics better than I did at that stage. Note: I was not number adverse, my strongest subject was always maths and there was plenty of statistics in A-level. These students even know to report effect sizes, which I had barely any comprehension of until my Masters. This is great progress, though judging from some of the reports I'm not completely convinced that these effect sizes have been interpreted or fully understood, other than knowing that they should be reported.

But, it is not the place of this post to rip on undergraduate statistics modules. I want to talk about the course "Improving your statistical inferences" taught by Daniel Lakens. In particular I want to highlight a few of the important lessons from this course that I wish I had discovered during my undergraduate. Side note: undergraduates, if you don't understand something that you think is important then it is also your responsibility to find that information - just because it is not in the lecture does not mean that it is not important, or even essential. On to two of the lessons.

Type 1 and Type 2 Errors

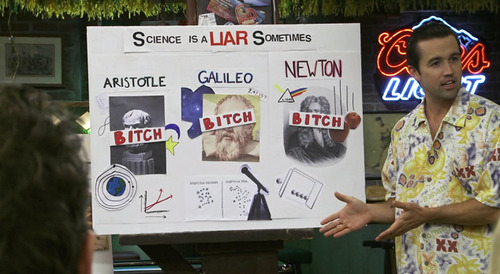

| |

| My wife didn't quite appreciate the reference. Any other it's always sunny fans out there? |

We all learned about type 1 and type 2 errors. What I now realise is that I did not quite appreciate how important they are. To recap, type 1 error (alpha) is the probability of finding a significant result when there is no real effect. Type 2 error (1 - power) is the probability that we find no significant result, even when there is a real effect. Or in the next picture...

Why is this important?

We know this, everybody is screaming. But, this underlies a key point in teaching of statistics.

In psychology we are trained that p < .05 is good, without complete appreciation for the importance of the actual effect in question. These lessons reinforce the notion that the effect size that is the important factor, rather than the p-value itself. This is of the utmost importance for those beginning their research journey, and I wish that I appreciated this during my undergraduate. Across the course, we learn about several methods to reduce these errors. Amongst other things, apriori methods such as upping your sample size help to control type 2 errors and apostiori methods such as controlling for multiple comparisons help to control type 1 errors.

P-curve analysis

p-curve analysis is a meta-analytic technique to explore distributions of p-values in the literature. It allows the analyst to draw inferences about the potential that a collection of statistical results contains p-hacking. Here is one question I did not know the answer to until taking this course. What p-value would we expect if there is no actual effect in an analysis? answer - any p-value, the distribution is uniform. So there is the same change of a p = .789 as p = .002 for example. Now, what about if there is a real effect? in this case our distribution shifts trending towards lower p-values (highly-significant or whichever terminology one prefers). If we plot this as a distribution we would expect something like the right hand graph in the figure below.

We may see a different pattern with a higher distribution of p-values at the high end, say .04-.05 or the "just-significant" line. This may be suggestive of p-hacking, optional stopping (running more participants until a significant result is found), amongst other things. See the left-hand graph in the image below. So, in short, if a literature is filled mainly with p-values at the high end of significance, e.g. lots of .04 or higher, then we might want to examine this research further to be more confident in the results.

|

Taken from Simonsohn et al.

http://www.haas.berkeley.edu/groups/online_marketing/facultyCV/papers/nelson_p-curve.pdf

|

The p-curve analysis lecture supports the view that there is nothing inherently special about p < .05, especially in the higher range of these values. Indeed, a high-proportion of these results within a research literature is suggestive that the effect may not be as robust as believed. Note however that p-curve analysis is a relatively new technique and cannot actually diagnose p-hacking etc. There is a more in depth discussion of p-curve analysis in the course.

About the course as a whole

The format of the course is engaging. The lectures are short, and include quick multi-choice checks of understanding. This was a nice touch for me in particular as it did lead me to revisit a few ideas straight away, rather than waiting on the exams to realise my error. The quizzes/exams are exactly what they need to be to ensure that those taking the course have a good overall understanding of the lessons. The practical aspect of the course is a stand-out aspect as it gives students the opportunity to engage with the analyses and simulate lots of data to really drive the lesson home. It may also be a nice way to expose people to R initially, without any pressure on learning the language. The final assignment requires students to pre-register a small experiment and report it. This was very useful to me, especially as the course will hopefully instill in everybody the benefits of registration and open-science. It is possible to complete each week with a commitment of 2-3 hours, depending on the topic, so the time commitment is not huge.

I hope that at the least this post is appreciated as a positive review and long-winded thank you to Prof. Lakens for the lessons in the course. More importantly, if it convinces one person in psychology to take the course and learn to better understand and make inferences from our and others statistical analyses, then the time spent writing this post has been well spent. Anybody interested, click here for the course homepage.

Any and all comments are appreciated on this first post as a return to blogging. Correct me if I have misquoted anything or misrepresented the information I have discussed.
